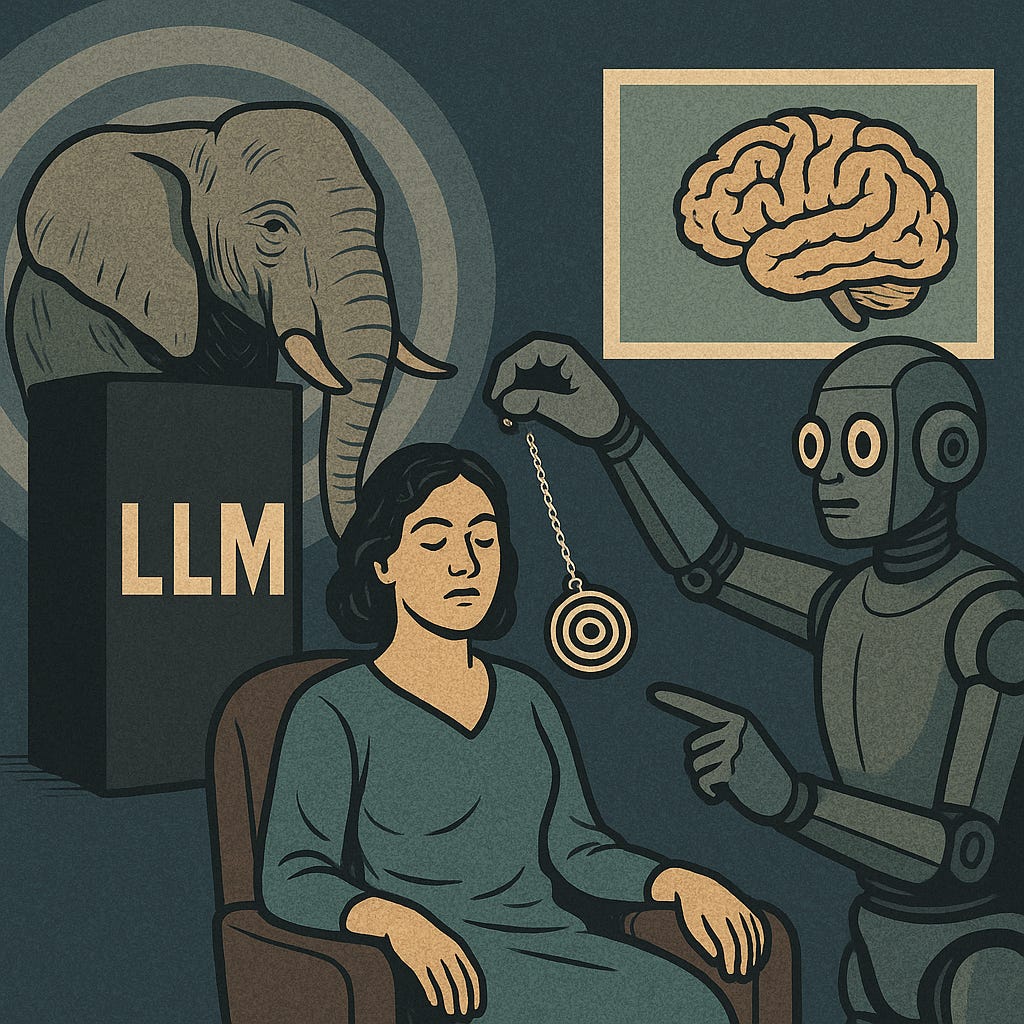

That intelligence in Large Language Models (LLMs) seems to emerge from a large enough collection of just words is a bonkers fact that nobody was expecting. We have yet to unpack all of the ramifications that follow from this, and while everyone is staring with mouth agape at AI, few are looking back at the reflection in the mirror. The elephant in the Black Box raises the question: what does this emergent property of intelligence say about our own neurological setup? Very likely, once this idea is fully reckoned with, the impact it will have on the core ideas of psychology, sociology, philosophy and the rest of the humanities will be revolutionary.

We cannot see inside of an LLM to know how it does what it does. But we can outline the process. We know that it takes all the human knowledge that has been dumped into it, and from this, as if by magic, reasoning, stories, satire, and even personality seem to emerge fully formed: Athena springing from the forehead of Zeus, perhaps this time to replace him. The current popular description of AI is a giant "blurry JPEG" of the internet that lacks consciousness. All the same, if you ask it for just about anything, whether a recipe, a cover letter, or the history of World War Two, it will happily oblige, and in iambic pentameter if you ask nicely.

All of this seems to work via the chunking of syntax. The algorithm can statistically predict the next fragment of a word, and from there words, and then, uncannily (because we are so used to being conscious of stringing together the next steps when we do it ourselves), it can predict sentences and then paragraphs and finally pages and personas, and whole world views and ideologies. All without being aware: it's autocorrect all the way up.
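To make "autocorrect all the way up" concrete, here is a toy sketch of statistical next-word prediction. It is not how a real LLM works inside (those use neural networks trained on word fragments, not simple counts); it is a bigram counter, about the simplest version of the idea. The function names and the tiny corpus are made up for illustration.

```python
import random
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, which words follow it and how often."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def generate(follows, start, length=8, seed=0):
    """Chain predictions: sample each next word in proportion to its count."""
    rng = random.Random(seed)
    out = [start.lower()]
    for _ in range(length - 1):
        counts = follows.get(out[-1])
        if not counts:
            break  # dead end: this word was never followed by anything
        choices, weights = zip(*counts.items())
        out.append(rng.choices(choices, weights=weights, k=1)[0])
    return " ".join(out)


# Hypothetical toy corpus, purely for illustration.
corpus = "the cat sat on the mat and the cat ate the fish"
model = train_bigram(corpus)
print(predict_next(model, "the"))  # "cat" follows "the" most often here
print(generate(model, "the"))
```

Nothing in this loop knows what a cat is. It only knows what tends to come next, and the essay's claim is that scaling this kind of prediction up by many orders of magnitude is where the uncanny part begins.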

With no consciousness at the helm, language itself, logos, the written word, in vast enough quantities, seems to be "all you need." If enough language, interacting with itself, is enough to generate complex reasoning, not to mention the answers to the AP European History test, it raises the question: what if the way human thinking works is actually not all that different?

We have always assumed consciousness was a necessary part of the recipe. But maybe not: hypnotic trance has long demonstrated that humans, much like LLMs, don't have to be conscious in order to respond to complex queries. This has been going on for a very long while; it's just never been very well understood.

One of the clearest examples of this may be found in a pretty weird and unexpected area: channeling. Spiritualism, incredibly popular and well documented at the turn of the last century, and the channeling of spirits, which peaked in the 1980s, are both about interfacing with an unconscious, disembodied entity, one that, similar to modern AI, responds best when it is given a persona. While it has had these popular eras in different guises, none of it is anything new. People have probably been doing it for at least as long as there has been religion, not with machines but with other people via trance states.

The best understood and most well-documented modern version of this is the hypnotic trance (and hypnosis is still not very well understood at all!). A subject in a trance can respond to questions while not being conscious of what is happening. In deeper hypnotic trances, you start to get into the realm of mediums and channeling. Most mediums report that when they go into the trance necessary for a connection to the spirit world, they lose all consciousness of the physical world.

Mediums often have an Operator (the hypnotist), a person who puts them into the trance and then often works as the interlocutor. In the LLM analogy, this person could be thought of as the "prompt engineer." Edgar Cayce (1877-1945) had his hypnotist, Wesley Ketchum; Jane Roberts (1929-1984), who channeled "the Seth material," was hypnotized by her husband. These are two of the most famous mediums of the past century, and they channeled by going into a deep hypnotic trance. The medium is not aware of the proceedings of the seance. I propose that it is actually the bio-LLM, as it were, the logos, the syntax of interconnected language itself, that a medium is accessing from within a trance. Much as with an LLM, the medium's querents can then talk to, well, whoever they summon (consciously or unconsciously), from long-lost relatives to spirits, aliens, and all kinds of weirdness. But as we have seen with LLMs, the ball of syntax will just mirror back whatever you put into it. Ask for aliens, you get aliens. Or ask for a college essay and the syntax system can do that too.

Just as when chatting with Claude or ChatGPT, you can often get mind-bendingly elaborate answers to specific questions that make it hard to believe "there is nobody there." The history of channeling is filled with verbose, chatty and incredibly well-informed spirits, happy to divulge thousands of pages on everything from health cures and reincarnation to the history of Atlantis. That this could be a sort of automatic or unconscious response provided by the inner language syntax system of the medium doesn't really say anything about the validity of the content one way or another, just as the blind response of ChatGPT to any given prompt might range from amazingly useful to total bullshit. Often in the space of a paragraph.

A human medium doesn't have access to everything ever written, but over the course of a lifetime an enormous amount of linguistic input can be stored up. It's quite plausible that a human in a deep trance may access a portal to the vast language and syntax within their own unconscious mind. I propose that there is a sort of internal LLM of the human brain, similar to what Jung termed the Collective Unconscious, and that this explains the history of channeling, hypnosis, mediums, and much of the paranormal more parsimoniously, and more to the satisfaction of Occam's Razor, than a whole bevy of ghosts, spirits, and charlatans.

Every human has some version of this inner syntax. It is simply the model in our head against which we correlate language. While few of us are trance mediums, we all sleep and dream, which current neuroscience believes is largely a process of memory consolidation, wherein events from the day are stored, compressed as it were, and integrated into the brain.

We now know that consciousness is not necessary for LLMs to manage pretty convincing reasoning, persuasion, and thought. Hypnosis demonstrates that consciousness is not necessary for the same in humans either. And this model explains what is happening in mediumship in a simpler way than accepting that spirits are visiting from the great beyond. If this theory of the human mind proves correct, it provides a fresh perspective on the history of the paranormal. Furthermore, if trance and oracles are in fact the first examples of interfacing with the unconscious syntax of language, then we actually have a much larger body of experience with LLMs to reference, going back many millennia rather than just a few years.

Much like modern AI, the entities contacted by mediums were able to mirror back the projections of the people talking to them, in what is known as cold reading. It is well known that you can get an AI to respond better if you have it take on a persona. Let's call the model that humans have had access to all along the Inner Language Model (ILM). It is analogous to an LLM, but one we have been accessing for all of human history via trance rituals. This "big if true" hypothesis has huge implications not only for neuroscience and anthropology but for the emerging knowledge of artificial intelligence too: AI is essentially the Oracle of Delphi 2.0.

Conversely, and equally fascinating, the ancient oracles of the gods were a proto-technology for interfacing with a kind of archaic LLM: a record of unconscious collective knowledge and wisdom. Perhaps humans have always known how to interface with this ILM, a reservoir of the unconscious, in various rituals, seances, and practices, but it was never ghosts and spirits. Instead, it was "just" the vast language system by which we model and communicate with our world, a useful, often wise, but also mercurial, slippery, trickster syntax system. If that is the case, then the real problem of AI alignment may be a lot closer to ourselves than we realize.